Respecting low-level components of content with skip connections and semantic information in image style transfer (CVMP’19)

Man M. Ho, Jinjia Zhou, Yibo Fan.

[DEMO Video], [Comparison Video]

This repository supports our paper (DOI: https://doi.org/10.1145/3359998.3369403).

News

| Date | News |
|---|---|
| 2020/04/04 | Code and trained models are now available. |
| 2019/12/15 | Added additional results. |

Environment Setup

We run our DEMO in an Anaconda environment with the following packages:

- OpenCV

- PyTorch >= 1.1.0

- QDarkStyle

- PyQt5

- NumPy

- gdown
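Before running the DEMO, it can help to confirm that the packages listed above are importable and that PyTorch meets the 1.1.0 minimum. The script below is a minimal sketch, not part of this repository; the mapping from package names to import names (for example, cv2 for OpenCV) is our assumption about a standard installation.

```python
import importlib.util
import re

# Assumed import names for the packages listed above (not taken from the repo).
REQUIRED_MODULES = {
    "OpenCV": "cv2",
    "PyTorch": "torch",
    "QDarkStyle": "qdarkstyle",
    "PyQt5": "PyQt5",
    "NumPy": "numpy",
    "gdown": "gdown",
}


def version_at_least(installed: str, required: str) -> bool:
    """Compare dotted versions numerically, ignoring local suffixes like '+cu101'."""
    def parts(version: str) -> tuple:
        nums = []
        for piece in version.split("+")[0].split("."):
            match = re.match(r"\d+", piece)
            nums.append(int(match.group()) if match else 0)
        return tuple(nums)

    a, b = parts(installed), parts(required)
    # Pad with zeros so that "1.1" compares equal to "1.1.0".
    length = max(len(a), len(b))
    a += (0,) * (length - len(a))
    b += (0,) * (length - len(b))
    return a >= b


def missing_modules() -> list:
    """Return the package names whose import name cannot be found."""
    return [name for name, module in REQUIRED_MODULES.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    print("Missing packages:", missing_modules() or "none")
    if importlib.util.find_spec("torch") is not None:
        import torch
        ok = version_at_least(torch.__version__, "1.1.0")
        print("PyTorch", torch.__version__, "OK" if ok else "is older than 1.1.0")
```

Comparing version numbers as integer tuples (rather than as strings) avoids the classic mistake where "1.10.0" sorts before "1.1.0".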

Get started

Clone this repo:

git clone https://github.com/minhmanho/respecting_content_ist.git

cd respecting_content_ist

Download the trained models:

bash ./models/fetch_models.sh

Stylize a folder of images:

python run.py --img_folder <folder_dir> --model <model_dir>

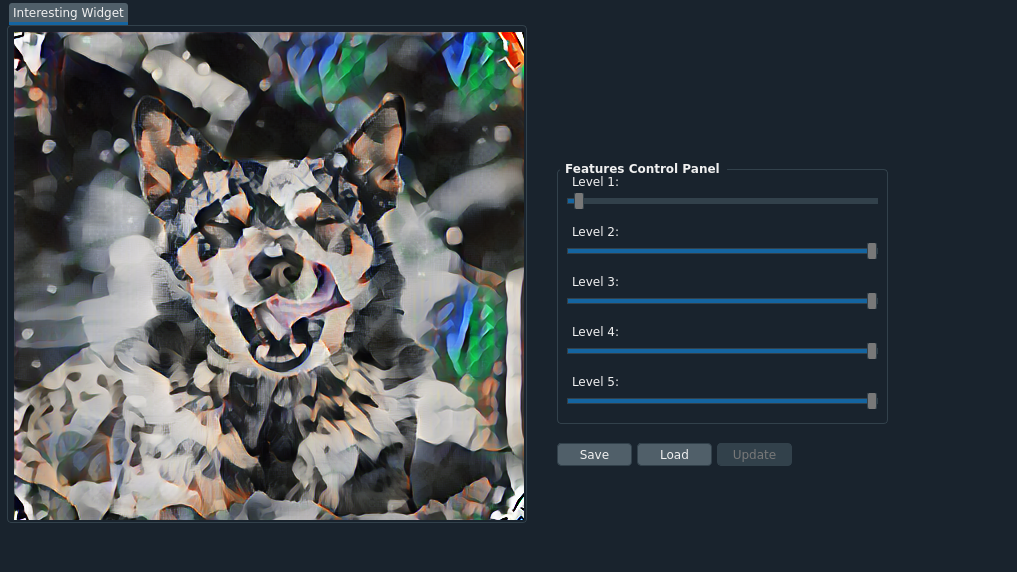
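To stylize the same folder with several trained models, one option is to call run.py once per model from a small Python wrapper. This is a hypothetical helper that only uses the two flags shown above (--img_folder and --model); the folder name my_photos and the *.pth model filename pattern are placeholders we assume, so adjust them to match the files fetched by fetch_models.sh. Where the stylized images are written is decided by run.py itself.

```python
import subprocess
import sys
from pathlib import Path


def build_stylize_command(img_folder, model, script="run.py"):
    """Hypothetical helper: argument list for one `run.py --img_folder ... --model ...` call."""
    return [sys.executable, script, "--img_folder", str(img_folder), "--model", str(model)]


def stylize_folder_with_models(img_folder, models, dry_run=False):
    """Hypothetical helper: run run.py once per model; with dry_run=True, only return the commands."""
    commands = [build_stylize_command(img_folder, model) for model in models]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError at the first model that fails.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Placeholder paths and extension (assumptions): replace with your own.
    models = sorted(Path("models").glob("*.pth"))
    for cmd in stylize_folder_with_models("my_photos", models, dry_run=True):
        print(" ".join(cmd))
```

Using sys.executable instead of a bare "python" makes each call run under the same interpreter (and Anaconda environment) as the wrapper.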

Run GUI DEMO:

python run.py --img <img_dir> --model <model_dir> --auto_update

If you have a powerful GPU, add --auto_update for a better experience. With auto-update enabled, the application re-stylizes the image automatically after every change you make; otherwise, you have to click the [Update] button to apply your changes.
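The difference between the two modes can be sketched as follows. This is a hypothetical illustration of the behavior described above, not the DEMO's actual code: StylizationPreview is a name we made up, and stylize stands in for the network's forward pass.

```python
class StylizationPreview:
    """Hypothetical sketch of auto-update vs. the manual [Update] button."""

    def __init__(self, stylize, auto_update=False):
        self.stylize = stylize          # stands in for the expensive forward pass
        self.auto_update = auto_update
        self.settings = {}
        self.result = None
        self.render_count = 0
        self._dirty = False             # True when settings changed since the last render

    def change(self, key, value):
        """Record a user edit; re-render immediately only in auto-update mode."""
        self.settings[key] = value
        self._dirty = True
        if self.auto_update:
            self.update()

    def update(self):
        """What the [Update] button does: re-render only if something changed."""
        if self._dirty:
            self.result = self.stylize(dict(self.settings))
            self.render_count += 1
            self._dirty = False
        return self.result
```

In auto-update mode every edit costs one forward pass, which is why a fast GPU matters; in manual mode edits accumulate and a single update() renders them all at once.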

Additional comparison results

Content

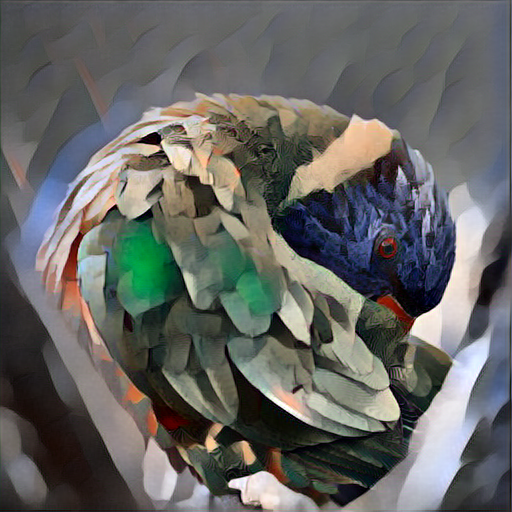

Most of the content images were collected from Unsplash.

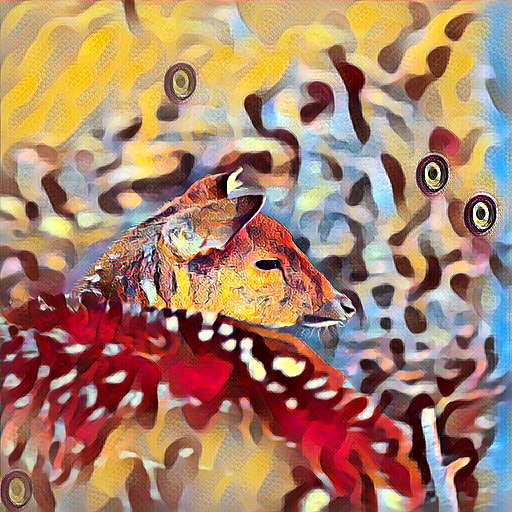

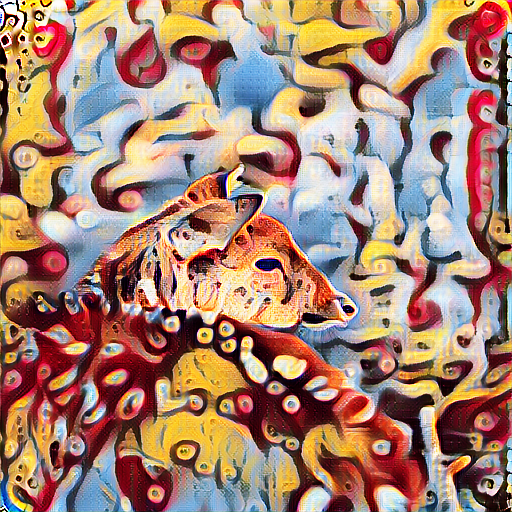

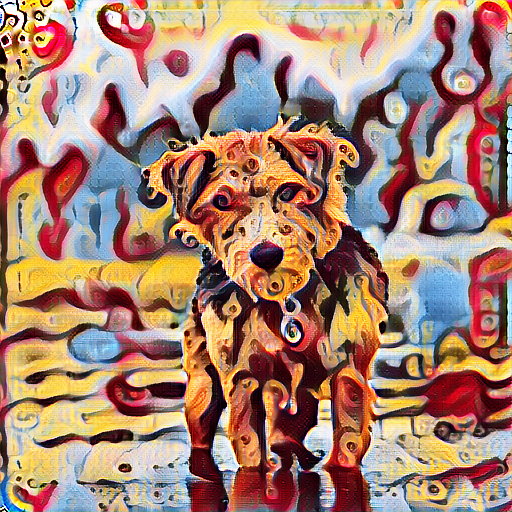

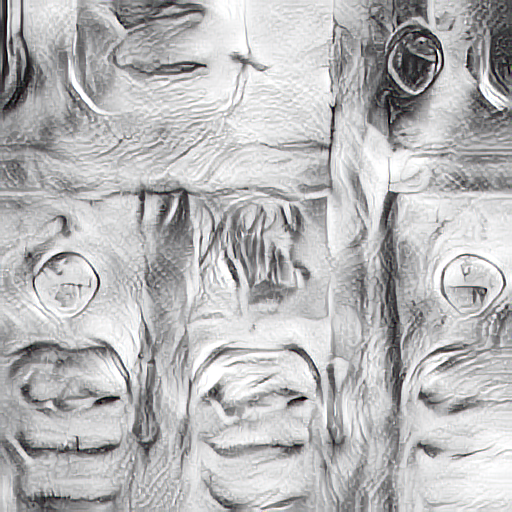

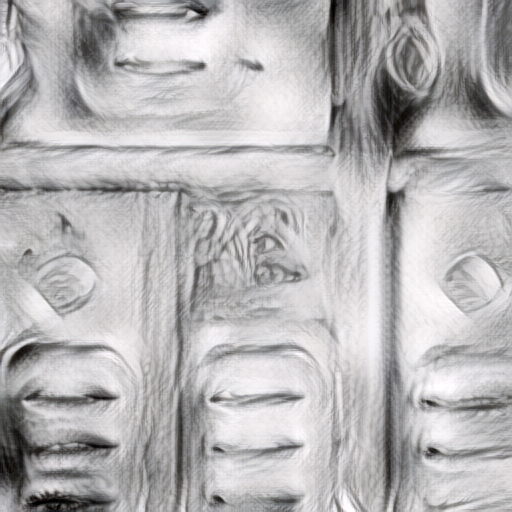

Comparison with Johnson’s work + Instance Normalization

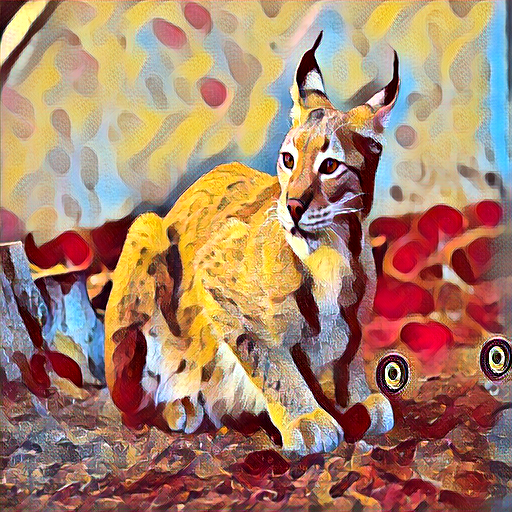

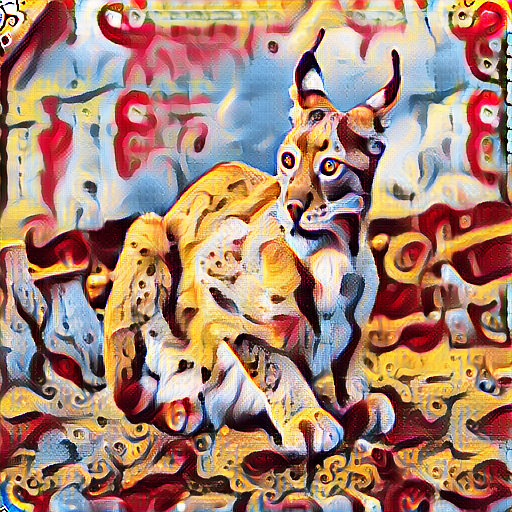

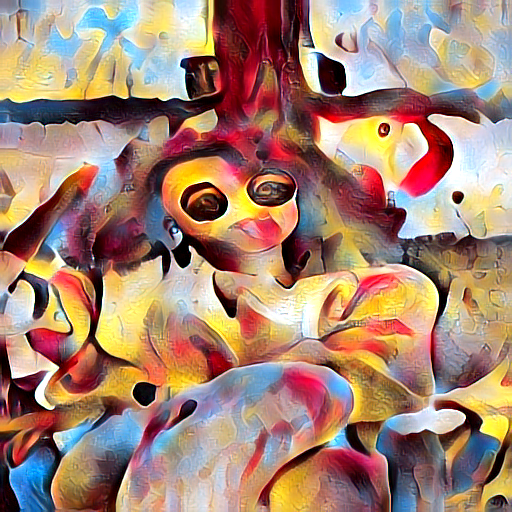

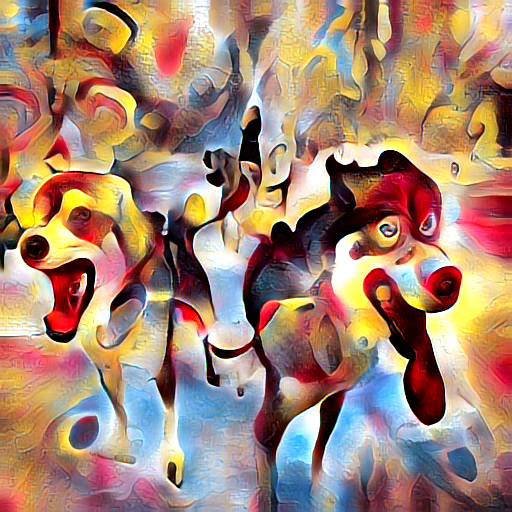

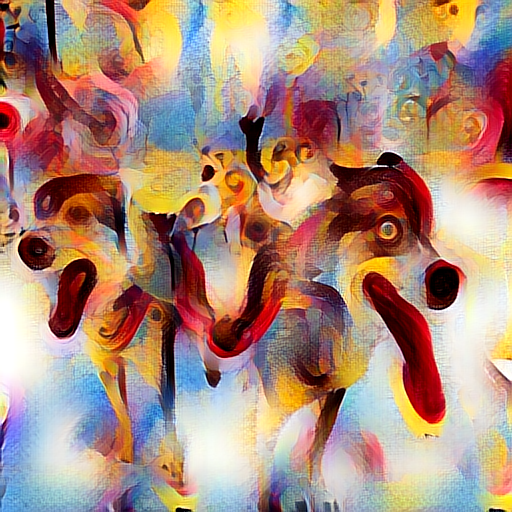

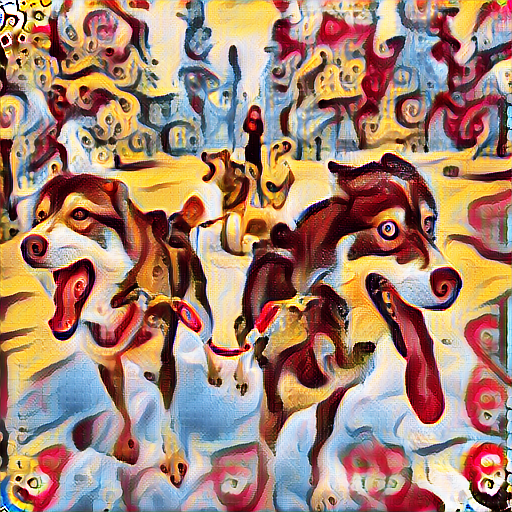

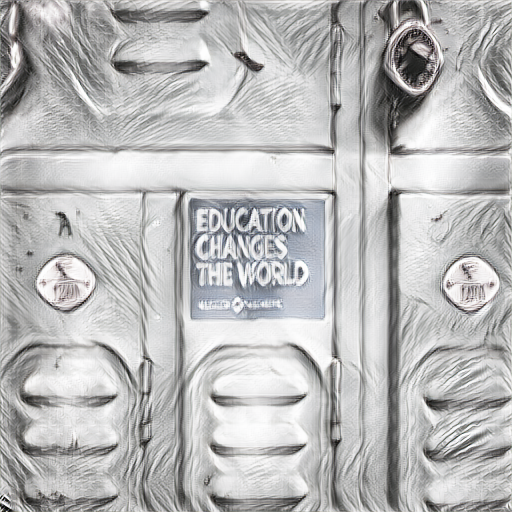

[Their work] [Ours] [Their work] [Ours]

Styles: Candy (first pair); Udnie (second pair)
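For reference, Instance Normalization (Ulyanov et al.) normalizes each channel of each image independently over its spatial dimensions, whereas Batch Normalization also pools statistics over the batch. The standard formulation, for a feature map $x$ of size $N \times C \times H \times W$ with learnable per-channel scale $\gamma_c$ and shift $\beta_c$ and a small constant $\epsilon$, is shown below; the exact configuration of the baseline used in this comparison is not specified here.

$$
\begin{aligned}
\mu_{nc} &= \frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W} x_{nchw}, \qquad
\sigma_{nc}^{2} = \frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W}\left(x_{nchw} - \mu_{nc}\right)^{2}, \\
y_{nchw} &= \gamma_{c}\,\frac{x_{nchw} - \mu_{nc}}{\sqrt{\sigma_{nc}^{2} + \epsilon}} + \beta_{c}.
\end{aligned}
$$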

Comparison with recent works

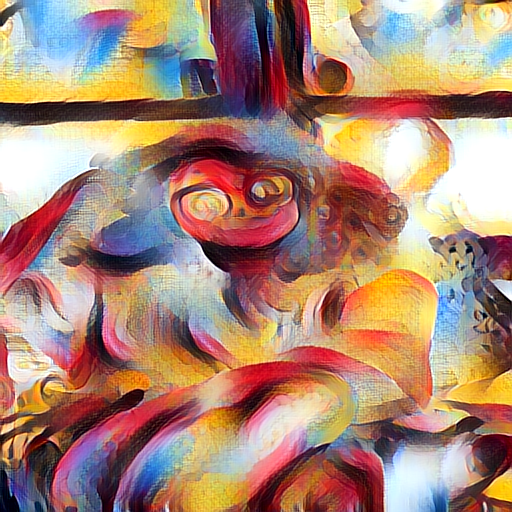

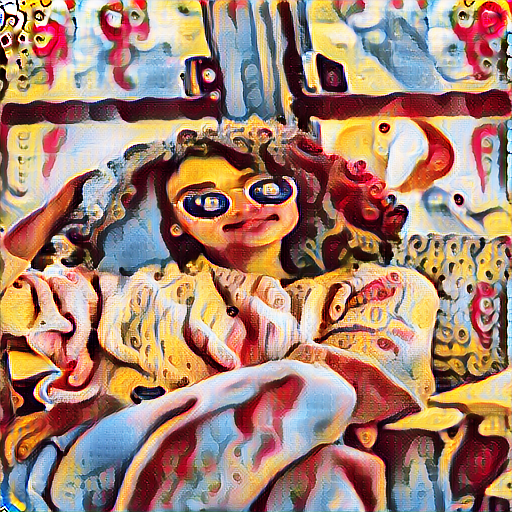

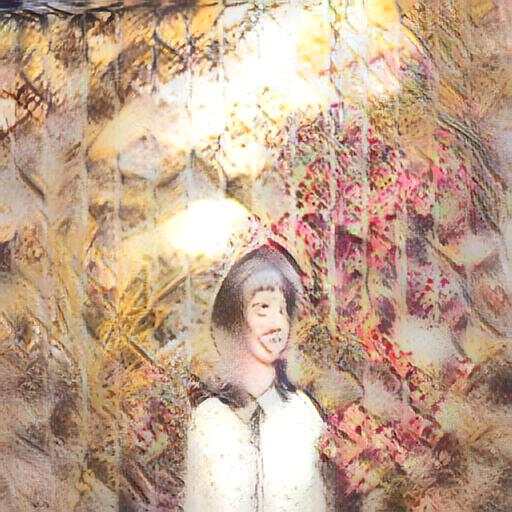

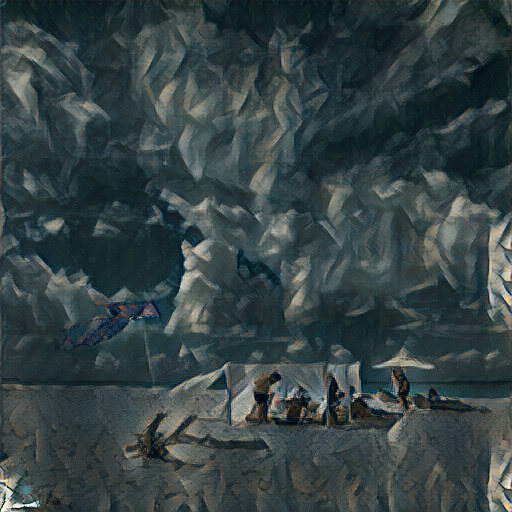

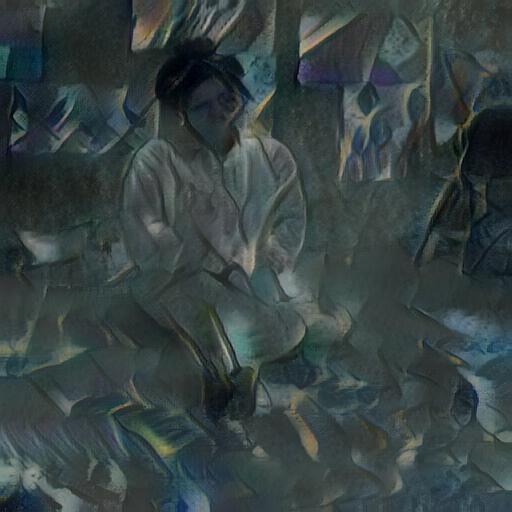

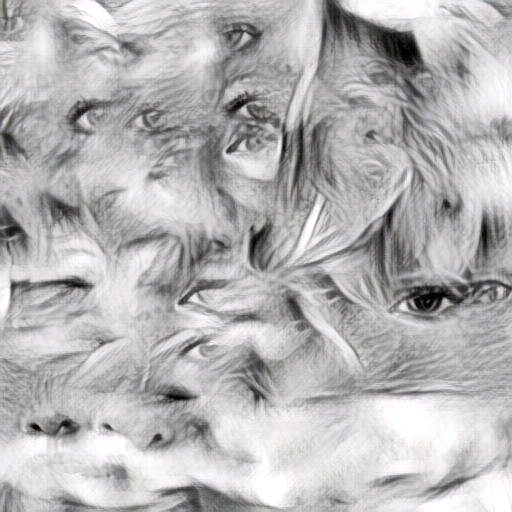

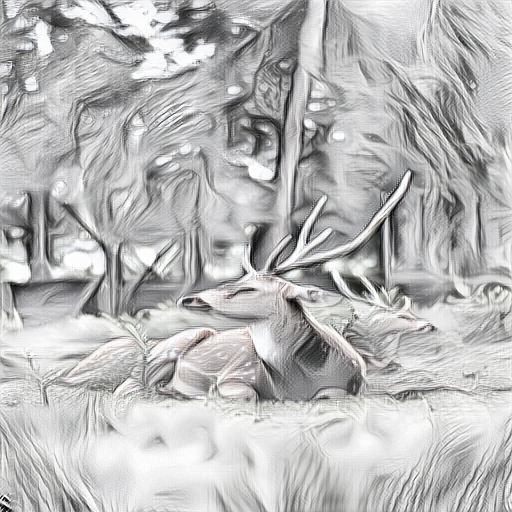

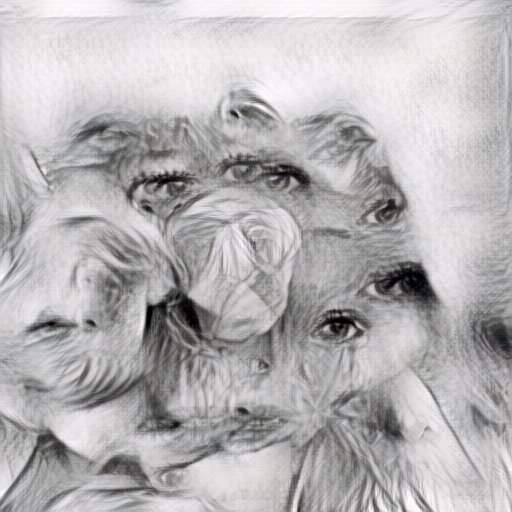

[AdaIN] [WCT] [AvatarNet] [Ours]

Styles: Candy; Watercolor painting portrait of a woman

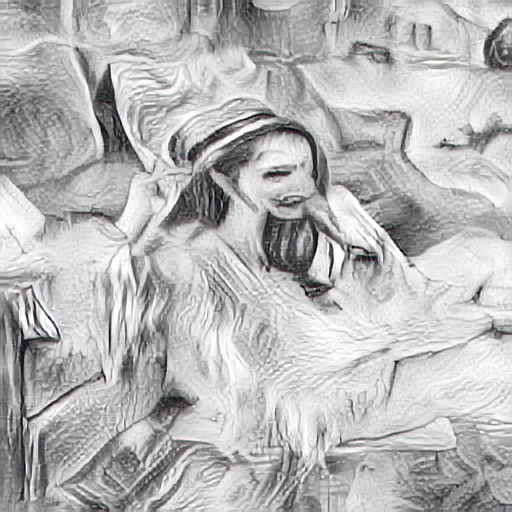

Styles: Seated Nude by Pablo Picasso; Watercolor painting of a rabbit

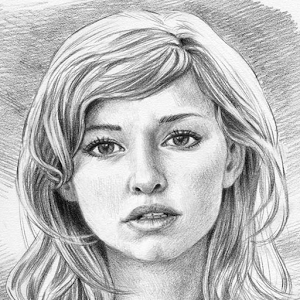

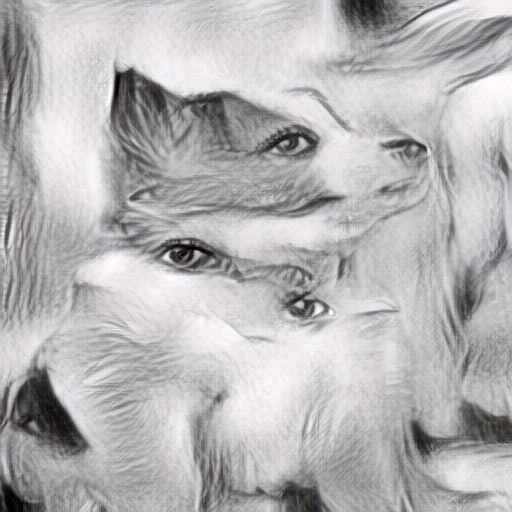

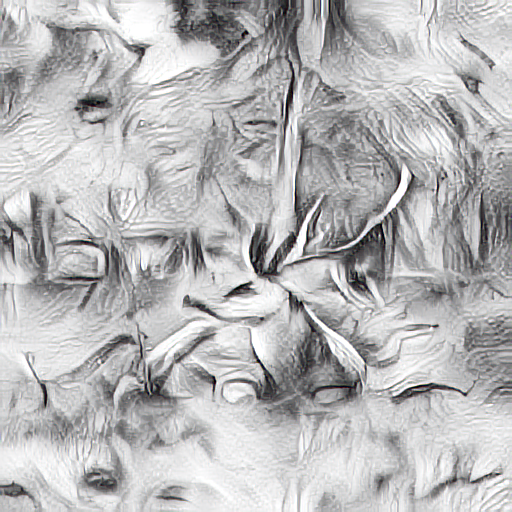

Style: A sketch

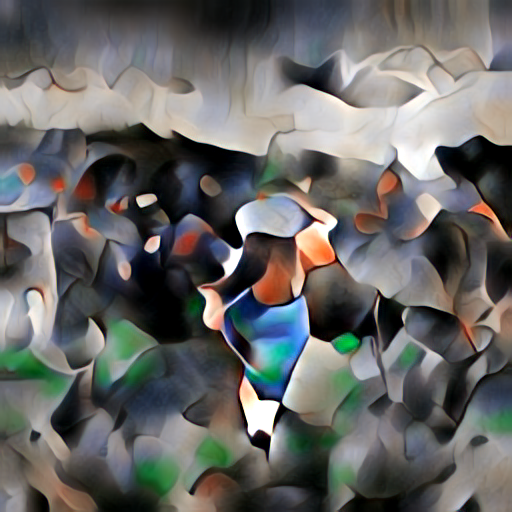

Style: Udnie

License

This repository (as well as its materials) is for non-commercial use and research purposes only.

Cite

@inproceedings{ho2019respecting,
  title={Respecting low-level components of content with skip connections and semantic information in image style transfer},
  author={Ho, Minh-Man and Zhou, Jinjia and Fan, Yibo},
  booktitle={European Conference on Visual Media Production},
  pages={1--9},
  year={2019}
}

Contact

If you have any suggestions or questions, or if the use of these images infringes your copyright or license, please contact me at man.hominh.6m@stu.hosei.ac.jp. I will take action as soon as possible.

Acknowledgements

We built our demonstration with reference to Interactive Colorization. This work is supported by the State Key Laboratory of ASIC & System, Fudan University, China.